Every time I hear or read about the UK’s potential for AI, there’s a recurring implicit theme: the UK cannot compete at the cutting edge of AI. The AI Opportunities Plan is more ambitious, with the government committing to establish UK Sovereign AI. But in conversations and at events, leaders tend to set lower expectations, suggesting that the UK should be a user of AI, or at best a developer of small models, rather than a leader. There are significant constraints holding the UK back from the AI frontier, including the immense computational requirements, energy resources, and specialised AI talent it demands. Many seem to be implicitly concluding that the UK simply doesn't have the capacity to be active at this level.

This assumption needs to be challenged: the field is evolving rapidly and is far from settled. Challenging assumptions can lead to surprising progress. For example, DeepSeek’s models shocked many by demonstrating that cutting-edge AI can be built with far fewer resources than previously believed necessary. However, DeepSeek wasn’t enough to shake our national self-doubt. There are reasons to believe the UK could be a major creator of AI ecosystems. Yes, the UK has economic and growth challenges, but it is also the world’s 6th largest economy. Not only is the UK a large economy, it also has world-leading research institutions, growing AI talent, and unique datasets! It is well placed to be at the frontier, to develop the tools and capabilities that embed it in the AI supply chain, and to lead AI development.

At the beginning of May, there was a fantastic demonstration of the UK’s potential to be a leader in AI. But can it dislodge this pessimism? On 7th May, Foresight AI was announced: a generative AI large language model trained on the NHS data of 57 million people, equivalent to the population of England. It has yet to be evaluated and benchmarked, so we don’t know how capable it is. But it is undeniably a unique AI model with the potential to benefit many who use the NHS, and it is only possible because of our health data ecosystem, our AI research and skills, and our access to compute. Foresight shows the UK can develop truly innovative AI.

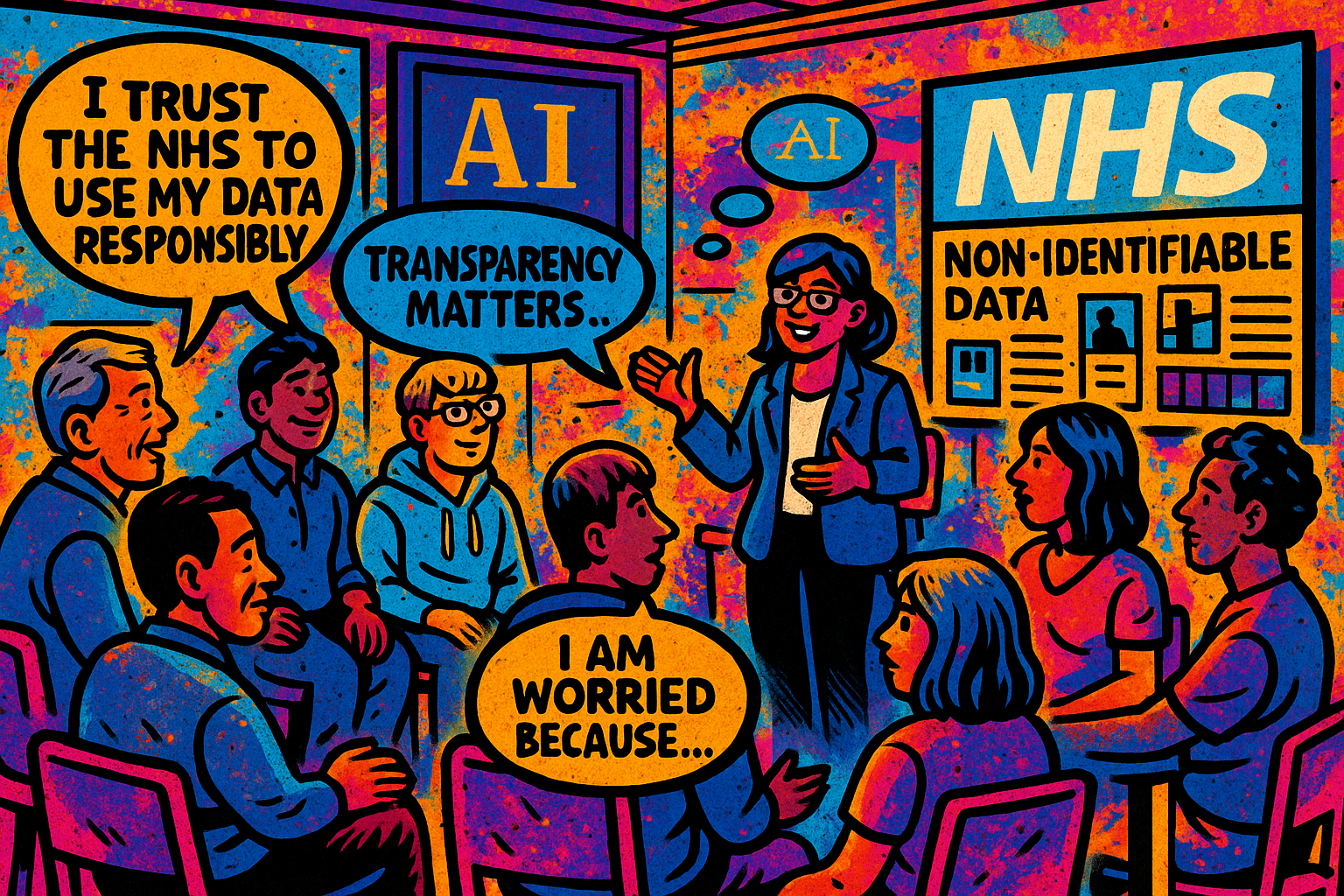

And yet, instead of recognising and celebrating this achievement, much of the commentary has focused on concerns. These concerns centre on data governance and privacy, and they are absolutely valid. Public trust is fragile and precious and should not be squandered. But it's equally important to acknowledge the potential benefit of this achievement: a generative AI model built at scale from NHS data stripped of identifying information. It is a demonstration of the UK leveraging its capabilities to be at the AI frontier.

The main concerns raised relate to three risks: 1) de-identified data still carries a non-zero chance of re-identification, and how likely this is remains unknown; 2) the model might reproduce medical information used in training, though it might not, or this might be very unlikely, we don’t know; and 3) limited transparency to, and control for, patients.

These are all valid concerns. But we should also look at what the extensive public engagement on NHS data use tells us:

- Large majorities of the public agree with the NHS using data to improve their own care (94%) and the care of others (89%), and with it working with companies to use patient data to improve care (73%).

- The public expect improvement in health to be prioritised over financial incentives and to benefit everyone.

The public have particular expectations when data is to be used:

- Strict rules so data cannot be passed to third parties

- Removing identifying information from data before it is accessed

- Sanctions and fines if companies misuse data

- Secure storage for data

And, in particular, they expect to be informed: “Transparency, honesty, a flow of information to and from the patient, and ensuring that the system works as described”.

This existing research on public attitudes and engagement shows that the public places a high degree of trust in the NHS and its partners to use data for everyone’s benefit. The safeguards in developing Foresight align with public expectations: de-identified data, a model held on a secure server, and limited access. The data was used without explicit patient consent, but in a way that is consistent with GDPR rules. Communication and transparency about how the data is used, however, should be improved.

Of course, trust isn’t monolithic. It varies between communities, and there are legitimate concerns that need to be understood and addressed. But the unasked question is: do we only move at the pace of the lowest level of trust? Or do we progress at the frontier of consensus, where the majority of support is, while continuing to engage with and listen to those who remain unsure?

What is needed next is a better understanding of the benefits and risks, so that we can continue to engage meaningfully with the public. This means benchmarking the model, understanding its capabilities, evaluating the risk of information leaking, and having an informed conversation with the public that includes low-trust communities. This allows us to step out of the realm of speculation and into evidence-based discourse.

We don’t build trust by standing still, which can disenfranchise people; we build trust by moving carefully and with transparency at the frontier, bringing both innovation and accountability together responsibly.

Foresight shouldn’t be the only model developed; there also needs to be more leading-edge AI development. But let’s begin by doing what should come naturally when something extraordinary is achieved: let’s celebrate, and be bold in our belief that we can do more!

Enjoyed this post? Do share with others! You can subscribe to receive posts directly via email.

Get in touch via Bluesky or LinkedIn.

Transparency on AI use: GenAI tools have been used to help draft and edit this publication and create the images. But the author is responsible for all content, including its validation.