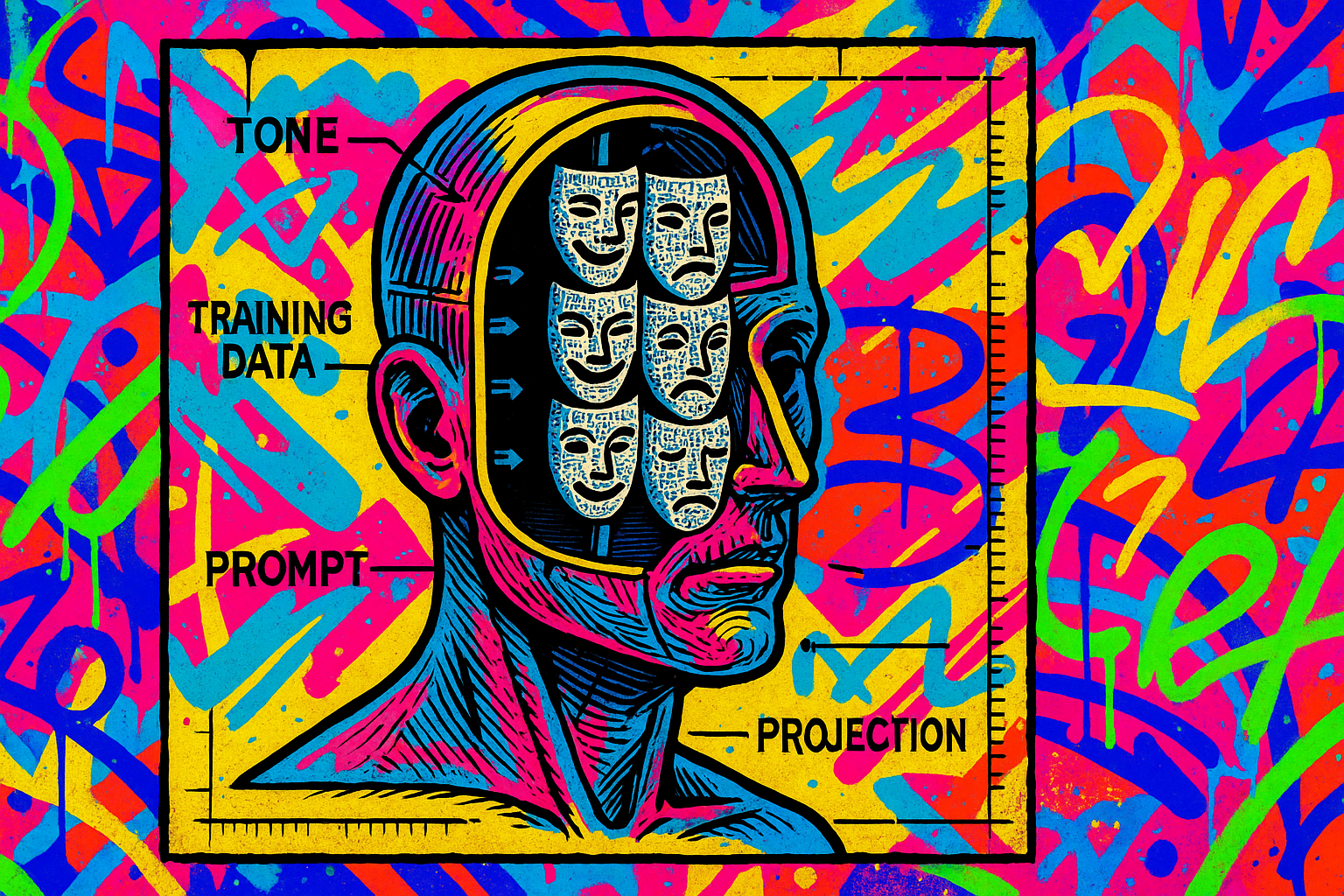

AI has personality! Or, to be more accurate, AI can generate sequences of words in text and audio that give the impression of distinct personality traits (less attention-grabbing!). At first, these traits emerged unintentionally from a combination of the training data and the AI development techniques. Today, personality is intentionally part of the design process for many generative AI models. It’s not just about the technology and data; there’s also a user dimension. The words used to prompt the AI, in combination with our natural tendency to project human qualities onto responses, lead us to read tone and character where there may be none. This natural tendency is amplified in the present day of email, messaging and social media, where the default has become to believe that text interactions are with other people. What we do see is that, as a result of AI personality traits, people form an affinity for AI. The launch of GPT-5, for example, saw a group of users complaining about the loss of GPT-4’s personality. There is also a more concerning side, where people become emotionally dependent on AI.

We shouldn’t think of affinity as unimportant. At the most basic level, it means someone likes the experience of using a technology, and so the technology is more likely to be used again. Affinity for technology influences how individuals interact with digital services.

Healthcare globally is expected to see increasing amounts of AI usage. The national ambitions outlined in the NHS 10 Year Plan set out a vision where AI is used frequently by patients and staff. In fact, the NHS is already embedding AI in healthcare services to follow up with patients post-surgery, answer routine queries online and by phone, and more. There's increasing use of AI for health in many countries, including the US, Australia, China and Singapore.

Does AI Personality Matter in Healthcare?

Consumer technology leaders have recognised that designing AI personality traits is an essential step in crafting approachable, relatable, and consistent AI experiences. The intention isn’t to trick customers into thinking they’re speaking to a human, but to build trust and comfort. These companies now treat the design of AI personality traits as part of their AI development.

While consumer sectors and healthcare are very different, the need to build trust and usability is common to both. For healthcare systems like the NHS, being able to provide timely and accessible information is important. While the evidence base is still developing, research does indicate that AI personality traits are a factor in successfully providing health information. The implication is that designing AI with the right personality traits is essential to create accessibility and trust in healthcare AI.

If an AI tool communicates in a way that is relatable and appropriate, it’s more likely to be used and trusted. An AI tool that can incorporate diverse communication styles and cultural contexts is likely to be more inclusive. Work on social robots shows that digitally excluded people who are wary of technology are less anxious about using it if it is socially familiar. A patient managing a stigmatised condition may prefer an AI that feels like a peer, or one with personality traits that feel less judgemental. Neurodivergent users may prefer literal, structured interactions.

There may be no single preferred AI personality trait for all patients or even for all contexts. Supporting patients to choose AI personalities that feel right for them could empower users, increase engagement, and strengthen inclusion. This in turn could improve the effectiveness of AI to inform, change behaviour, support and motivate.

Challenges and Ethical Considerations

Yet the introduction of AI with distinct personality traits is not without risks. While personality traits can make AI feel consistent, they also risk AI responding in unexpected or inappropriate ways. Users may overestimate the AI's capabilities, leading to misplaced trust or even dependency. There is also the danger of reinforcing gender stereotypes or cultural biases, especially as AI assistants often default to female names and voices.

Evolving Skills and Professions

Much has been said about AI replacing jobs, but less attention has been paid to the new skills and professions AI will create demand for. AI development will undoubtedly require more coders and AI software engineers. But the crafting of AI personalities could mean a demand for much broader skills. Microsoft and Google have employed poets, playwrights, comedians, and fiction writers to help develop the personality traits for their AI tools. What is needed to develop robust, reliable and relatable personality traits that represent the organisation or context is currently unclear.

AI personality traits are likely to be an essential property for good human-AI interactions, but there are so many questions that still need to be answered!

Unanswered Questions

There is much we do not yet understand about AI personality traits and human interactions. Which AI personalities help, and which hinder, healthcare experience and outcomes? How do preferences vary across clinical contexts, patient groups, and cultures? The consumer sector will continue to have valuable lessons to offer healthcare, but research and real-world testing are sorely needed to identify best practice for health and care.

As AI becomes mainstream in healthcare, personality traits should be an essential design element to create accessible and inclusive AI-enabled healthcare capabilities.

I hope you enjoyed this post. If so, please share it with others and subscribe to receive posts directly via email.

Get in touch via Bluesky or LinkedIn.

Transparency on AI use: GenAI tools have been used to help draft and edit this publication and create the images. All content, including validation, is by the author.